Beyond the ‘Metaverse’: Empowerment in a blended reality

The Vanity Fair logo in the corner gives an aura of authority to the satirical YouTube video, which features a sweatshirt-cloaked celebrity with periwinkle hair and chunky chain necklaces: an uncanny blend of pop musician Billie Eilish’s body and mannerisms and comedian Pete Davidson’s unmistakable face and grin. “A year touring on the road can really take its toll on you,” reads the video’s caption, which juxtaposes this deepfake (AI-synthesised audiovisual content designed to appear authentic) against a real video of a younger Eilish.

This article could end here, with a comment about how realistic deepfakes have become and a caution that, while these technologies can be used creatively and even for good, deepfakes are not all fun and games. The majority of deepfakes are pornography made without consent, and as we’ve seen earlier this year with a fake video showing Ukrainian president Volodymyr Zelensky asking his soldiers to surrender to Russia, deepfakes can be used maliciously to create confusion and spread destabilizing disinformation on the global stage.

However, this article won’t end here.

Instead, consider this: a wall of faces of people of apparently African descent—the faces are uncannily smooth and smiling—and the name of the digital collection is “META SLAVE.” In this case, the blockchain-based, unique digital artifacts known as NFTs (non-fungible tokens) are Black people’s images, part of a digital auction block to buy and sell. It is a chilling virtual marketplace reminiscent of 19th-century public slave auctions. It recalls the atrocity of the European transatlantic slave trade targeting Africa, the fear of the artificial in a television show like Westworld, and the horror of enslavement in the film Get Out.

The physical world and the virtual are blending in ways that go far beyond deepfake technologies, and in both worlds, news consumers are being bombarded by inaccurate or irrelevant information.

Is this a new phenomenon? Arguably, it’s not. During World War II, artist John Heartfield used manipulated photographic images (photomontage) to create political satire. People have long been making unlicensed and unauthorised baseball cards to collect—sometimes just to pay homage to their favorite players, but also to deceive unsuspecting online buyers.

How are we, the public, to construe this kaleidoscope of fake and manipulated media from these older forms up through digital forms still on the horizon?

Blended worlds

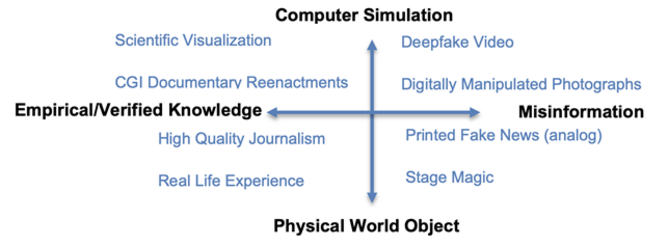

I often use the term “technologies of virtuality” to describe computer-based media that integrate imaginative content with physical-world content, ranging from photoshopped images to deepfake videos, virtual and augmented reality (VR/AR), and NFTs such as those described above.

All these technologies can be placed along two axes: one indicating the degree of computer-based simulation (virtuality), and the other indicating the degree of misinformation (or, inversely, truthfulness).

I prefer the term “physical world” to “real world” because the experiences people have online, whether negative (bullying) or positive (learning), are real, with impacts on our behaviours, mental health, and more. A tragic example is the mass shooting that killed 10 people at a Buffalo, New York supermarket in May, which police called a hate crime. The suspect laid out his plans in an online manifesto that was rife with disinformation, some of it in the form of memes referencing “the Great Replacement,” which falsely posits that white U.S. citizens are being “replaced” by people of color. It was not the first instance of internet memes circulating in far-right digital spaces being used to justify, or serving as a prelude to, physical-world violence. The Buffalo shooter went on to live-stream the attack, claiming that “some people will be cheering for me,” closing the circle of real and virtual.

Technologies of virtuality

Two aspects set recent technologies of virtuality apart from some earlier, non-digital technologies: the ways they are circulated, ranging from social media to blockchains, and how much they engage our senses of sight, sound, and sometimes touch, smell, or even taste to make us feel that the virtual, simulated experiences are not merely simulations.

When these technologies are pervasive, indistinguishable from physical world reality, and perhaps even commodified, the threats are multiplied—ranging from exploitation of individuals to sowing conflict.

I suggest that four primary mechanisms to combat the negative impacts of technologies of virtuality are:

- Digital Forensics: image and audio processing software that analyses content to detect computational manipulation.

- Verification: use of cryptographic digital “signatures” to authenticate legitimate content.

- Policy: use of industry guidelines and government legislation to regulate deepfake production and prohibit the use of deepfakes to mislead the public.

- Public Literacy: raising public awareness of the primary aims, uses, and methods of deepfakes; providing the public with means to discern misinformation in media.
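
To make the verification mechanism a little more concrete, here is a minimal sketch in Python of how a publisher might attach an authenticity tag to a piece of content and how a recipient might check it. It is an illustration under stated assumptions, not a description of any deployed system: it uses a keyed hash (HMAC) with a shared secret from Python's standard library as a simple stand-in for the public-key digital signatures that real provenance schemes would more likely rely on, and the names `SECRET_KEY`, `sign_content`, and `verify_content` are hypothetical.

```python
import hashlib
import hmac

# Hypothetical shared secret, for illustration only. A real provenance system
# would more likely use public-key signatures rather than a shared key.
SECRET_KEY = b"example-publisher-key"


def sign_content(content: bytes, key: bytes = SECRET_KEY) -> str:
    """Return a hex tag that binds the key to these exact bytes."""
    return hmac.new(key, content, hashlib.sha256).hexdigest()


def verify_content(content: bytes, tag: str, key: bytes = SECRET_KEY) -> bool:
    """Check whether the content still matches the tag issued for it."""
    expected = sign_content(content, key)
    # compare_digest avoids leaking information through comparison timing.
    return hmac.compare_digest(expected, tag)


original = b"frame data of an authentic video"
tag = sign_content(original)

print(verify_content(original, tag))                 # True: content is unaltered
print(verify_content(original + b" (edited)", tag))  # False: content was changed
```

Even a small change to the content produces a mismatch, which is the property that makes this kind of tag useful for authentication. What it cannot do is say anything about content that was never signed in the first place, one reason verification alone is not enough.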

The role of media literacy

While all four approaches are important, public literacy and nurturing critical thinking are crucial. We need to help empower people in situations where digital forensics, legislation, and verification fail or are unable to keep up with the evolving technology. Our online project, Media Literacy in the Age of Deepfakes (https://deepfakes.virtuality.mit.edu/), is an attempt to do so. Supported by the Abdul Latif Jameel World Education Lab, it is a learning module that aims to arm students with critical thinking skills that they can use to better understand and combat deepfakes.

I believe strongly in the capacity of individuals to be discerning creators, consumers, and distributors of media. Whether you laugh or shudder at the next comedic deepfake, immerse yourself in the so-called metaverse, or purchase a unique digital artwork, I invite you to take some time to reflect on the nature, implications, and possibilities entailed by the technology of virtuality at hand.

Pakinam Amer contributed to this article.